CNA Statement to UN Group of Government Experts on Lethal Autonomous Weapon Systems, August 29, 2018

This statement was made by the Director of CNA’s Center for Autonomy and AI to the UN’s Group of Government Experts (GGE) on Lethal Autonomous Weapon Systems (LAWS) in Geneva during the GGE’s August 27-31, 2018 meeting.

Ambassador Gill, thank you for your leadership on such a complex and critical issue, which is at the heart of the future of war. I have been part of these discussions since 2016, first as a diplomat and now as a scientist. Overall, we have seen some progress in the past few years. I would point in particular to the UK and Dutch positions, which illuminate a broader framework for human control over the use of force.

Yet it is clear that there is a fundamental disagreement in this body on the way forward. Some have said today that this is because some States are stalling. But I offer another explanation.

Einstein said this: “If I had an hour to solve a problem, I would spend 55 minutes thinking about the problem and 5 minutes thinking about solutions.” I believe this wisdom applies here. We have not had more progress in the past few years because we have not sufficiently defined the problem. States and other groups are still talking past one another.

One example. There are two types of AI: general and narrow. General AI is often described as an intelligent or superintelligent type of AI that solves many types of problems; it does not in fact exist, and may never exist. Narrow AI is a machine performing specific pre-programmed tasks for specific purposes. This is the type of AI we see in use today in many applications. These two types of AI carry very different risks. When we fail to distinguish between them in our discussions, we talk past one another and cause confusion. Framing this discussion around narrow AI, the kind of technology that is actually available to us now and in the near future, would help us focus on the specific risks of AI and autonomy that need to be mitigated.

That is one example, and there are others. I discuss a number of such risks in CNA’s new report, AI and Autonomy in War: Understanding and Mitigating Risks.

Finally, there is much discussion of civilian casualties in this forum. It is surprising that, as a Group of Government Experts, we have not explored this particular problem in more depth. Such exploration is entirely possible. Having led many studies on how civilian casualties occur in military operations, I believe there is much we as a group can learn about risks to civilians, how autonomy can introduce specific risks, and how technology can mitigate risk.

Overall, I believe we can learn from Einstein: this is a problem we can solve, but we still have work to do to adequately frame the problem.

Efforts to develop AI in the Russian military

This blog entry is written by CNA Russia Program team member Sam Bendett to highlight the current Russian efforts to develop artificial intelligence.

On September 1, 2017, Russian President Vladimir Putin drew the attention of the international high-tech and military community by stating that the country that masters artificial intelligence (AI) will “get to rule the world.” He also remarked that artificial intelligence is “humanity’s future.” Although the size and scale of overall investment in AI development in Russia is small relative to American or Chinese efforts, Russia’s private sector spending in this field is projected to increase significantly in the coming years. Russia may represent only a fraction of global investment in developing AI, but the government is seeking to marshal national resources to make the country one of the “AI superpowers” of the future.

The majority of Russian AI development plans are long-term, with intellectual and technical capital organized into several lines of effort. Many of these projects are carried out under the auspices of the Russian Ministry of Defense (MOD) and its affiliated institutions, research centers, and industrial conglomerates. Several efforts merit closer attention, as they may determine whether the government succeeds in engaging the national resource base for breakthroughs in artificial intelligence:

- The Foundation for Advanced Studies (FAS – Фонд перспективных исследований (ФПИ)) was established in October 2012 by presidential decree to serve as Russia’s equivalent to DARPA (the U.S. Defense Advanced Research Projects Agency). The purpose of the Foundation is to “promote the implementation of scientific research and development in the interests of national defense associated with developing and creating innovative technologies.” FAS scientists and researchers are already working on several artificial intelligence and unmanned technology projects.

- The “Era” military innovation technical city, or “technopolis,” was recently established on the Russian Black Sea coast. The technopolis will be financed with funds from the Foundation for Advanced Studies, as well as from private investors and from the enterprises and scientific organizations of the country’s military-industrial base, with the aim of developing defense technologies and innovations, including AI. One of Era’s defining characteristics is intended to be a flexible, start-up style of development aimed at achieving technological results quickly and efficiently.

- The MOD, together with other Russian government agencies, hosts an annual forum titled “Artificial Intelligence: Problems and Solutions” to discuss domestic AI developments and review international achievements in the field. Defense Minister Sergei Shoigu has called for Russian civilian and military designers to join forces to develop artificial intelligence technologies to “counter possible threats in the field of technological and economic security of Russia.” This initiative’s most notable result is the publication of a ten-step recommendation “roadmap” for expanding AI research in Russia. The roadmap outlines public-private partnerships and short- to mid-term developments such as education and the establishment of various technical standards, along with specific tasks for the country’s military. One of its most important proposals is to establish a National Center for Artificial Intelligence (NCAI), which would provide a national focus for the use of AI. The NCAI could assist in the “creation of a scientific reserve, the development of an AI innovative infrastructure, and the implementation of theoretical research and promising projects in the field of artificial intelligence and IT technologies.” The Russian Academy of Sciences joined the Foundation for Advanced Studies in putting forth the proposal for the creation of this new center.

Currently, the Russian military is working on incorporating elements of AI into its electronic warfare, missile, aircraft, and unmanned systems technologies, with the aim of making battlefield decision-making and targeting faster and more precise. Russian policy makers and military designers are working on integrating elements of AI into unmanned swarm, counter-UAS, and radar warning systems to bolster the nation’s security. In the civilian sector, Russian AI work is focused on image and speech recognition, as well as neural networks and machine learning, achievements that may also be incorporated into military systems down the line. The MOD is looking to use AI in data and imagery collection and analysis, seeking advantages in the speed and quality of information processing. Another defining characteristic of current Russian AI efforts is the relative absence of discussion about the ethics of using artificial intelligence, as the military and society appear to agree that achieving pragmatic results takes precedence over other considerations.

The Russian military’s effort to develop AI has medium- to long-term implications for the US and its partners. Today, the Russian government and its military are trying new and innovative approaches to advanced technology development that may yield results relatively quickly, as the Russian President and the MOD are in sync in understanding the need for their nation’s military and industry to improve qualitatively. Developing artificial intelligence is one of the ways Moscow intends to compete with Washington as an equal, an effort that merits close and deliberate attention.

Russian kryptonite to Western hi-tech dominance

Russian state corporation “Russian Technologies” (Rostec), which has extensive ties to the nation’s military-industrial complex, has overseen the creation of a company with the ominous name “Kryptonite.” The company will work on creating “civilian IT products based on military developments in information security, including blockchain.” Specifically, Kryptonite’s work will involve “cryptography, machine learning, Big Data, quantum computing, blockchain and the security of telecommunications standards.”

This is notable because, when it comes to such key information technology concepts, it is the Russian state sector, not the civilian sector, that leads in research and development (R&D). In fact, as the announcement indicates, these military achievements will now be made available to the Russian private sector, presumably with an eye toward eventual widespread domestic and international use. The Russian Ministry of Defense (MOD) has made no secret of its desire to achieve technological breakthroughs in IT and especially artificial intelligence, marshalling extensive resources for a more organized and streamlined approach to information technology R&D. The MOD is also overseeing a significant public-private partnership effort, calling for its military and civilian sectors to work together on information technologies, while hosting high-profile events that aim to foster dialogue between its uniformed and civilian technologists.

Kryptonite’s work is reminiscent of the outflow of American military information technologies to the civilian space during the Cold War, with the Internet and the cellular phone being the most prominent examples. It also brings to mind the Soviet technological experience, when the military and security services had access to the nation’s best and brightest minds, along with first pick of the major resources needed for advanced technology development. The drawback was that such Soviet achievements were never transferred to the civilian consumer sector, which would certainly have benefited from large-scale domestic adoption of such technologies. The MOD’s current work on IT and advanced technologies aims to compensate for the lack of funding and development following the dissolution of the USSR and the tumultuous, cash-poor 1990s and early 2000s, when Russia imported numerous Western technologies for domestic and military use. Those policies created an uncomfortable dependence that the MOD now aims to overturn. While such efforts are in their early stages, there is reason to assume that today’s Russian military establishment is willing to experiment with a variety of technological approaches to develop domestic know-how and deliver it to the eventual consumer, military or civilian. One such approach is the creation of the “Era” technopolis, which will work on various advanced technologies, including IT, blockchain and artificial intelligence; the effort is already underway, and the technopolis is scheduled to open officially in September 2018.

Kryptonite’s importance as a military-civilian technological nexus was underscored by Rostec’s leadership, which stated that “…it is obvious that Russian military developments in information security have great potential for civilian use, including hi-tech exports. The creation of (this) joint venture is an excellent example of public-private partnership, and consolidation of efforts will increase synergy…while developing (IT) market competencies, as well as by incorporating the scientific and technical potential accumulated by Russia’s defense industry.” To achieve that, Kryptonite will work to “attract investments in order to commercialize innovative solutions and technologies created by the Russian defense industry’s enterprises and research institutes, as well as develop its own information security and Big Data competencies.”

As for the company name, it probably wasn’t picked by accident. The Russian government has long expressed concern that its reliance on imported IT products creates major security vulnerabilities. Developing domestic information technologies will help overcome that, while also allowing the Russian technology sector to eventually compete with American, Western and Asian hi-tech leaders. This technology race is only expected to accelerate, and Russian achievements merit close attention.

Video: the Latest on Russia’s Unmanned Vehicle Program

Samuel Bendett, a research analyst in the Center for Naval Analyses’ Russia Studies Program and a fellow in Russia studies at the American Foreign Policy Council, discusses the buzz surrounding Russia’s Hunter unmanned aerial vehicle, the failure of its Uran-9 unmanned ground vehicle program following secret combat testing in Syria, the outlook for Russian submarine and unmanned underwater vehicle innovation, and more during a June 27, 2018, interview with Defense & Aerospace Report Editor Vago Muradian in Washington.

Redefining Human Control: Event at the UN’s Meeting on Lethal Autonomy

Last week, the UN again took up the issue of lethal autonomy in its Group of Government Experts on Lethal Autonomous Weapon Systems (or LAWS), which meets under the Convention on Certain Conventional Weapons (CCW). Over the past few years, there has been a growing consensus within the CCW that human involvement in the targeting process is a solution to the risks posed by LAWS. Given this consensus, the question becomes: what is the precise nature of this human involvement? This involvement is often described in terms of the decision to use the system, akin to a finger on the trigger. In that view, human involvement amounts to a human making the decision to pull the trigger.

An event at last week’s GGE argued that this view is too narrow. Titled “The Human-Machine Relationship: Lessons From Military Doctrine and Operations,” the event was organized by CNA’s Center for Autonomy and AI (CAAI) and the University of Amsterdam. It was attended by officials and diplomats, including the ambassador of the Netherlands. Dr. Larry Lewis, CAAI Director, was joined by Merel Ekelhof, a Ph.D. researcher at the VU University of Amsterdam, and U.S. Air Force Lt. Col. Matt King.

Merel Ekelhof is known to the UN community as the person who first introduced, back in 2016, the idea that human involvement spans the entire military targeting cycle. The event built on that idea and showed specific forms this human involvement can take. Lt. Col. King discussed the legal requirements for the use of force and how they were satisfied in his personal involvement in targeting decisions at the Coalition Air Operations Center in Qatar in 2017. Dr. Lewis then walked the group through several real-world incidents involving fratricide and civilian casualties, showing that humans are not infallible and that broader human involvement, beyond the trigger-pull decision, can create a safety net that reduces the risks of autonomous systems.

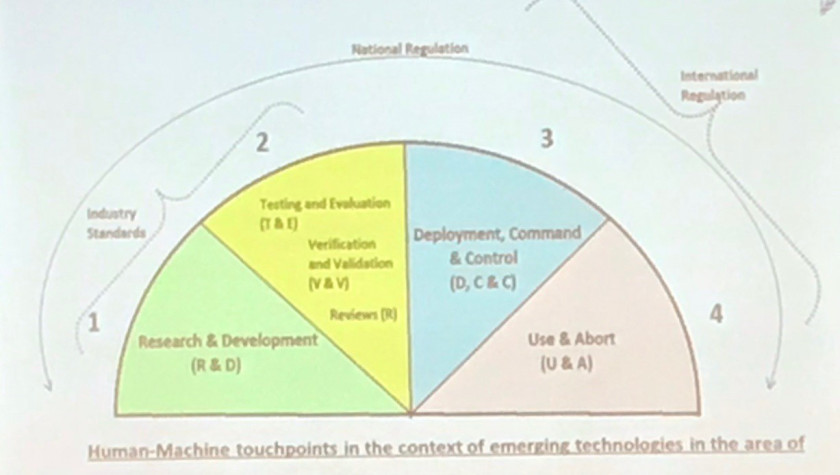

As the meeting closed, Ambassador Gill (presiding over the GGE) suggested a model that reflects this broader view of human involvement in the use of lethal force. The picture below (dubbed the “sunrise” chart by the UK delegation) shows his initial thoughts in this regard. CAAI is developing a follow-on report for the next GGE, to be available at the end of August, in which we will provide additional details and observations consistent with this broader view. Stay tuned!

For more information, see the CAAI report: Redefining Human Control.

Russia Races Forward on AI Development

The recent 2017 statement by Russian President Vladimir Putin that whoever masters AI will get to rule the world should be interpreted as a recognition of Russia’s current place in this unfolding technology race, and of the need for the nation’s government, private sector, and military to marshal the resources required to persevere in this domain. This is already beginning to happen. The Russian government is increasingly developing and funding various AI-related projects, many under the auspices of the Ministry of Defense and its affiliated institutions and research centers. All this government activity has apparently infused many Russian developers with new confidence; some even claim that AI may arrive in just a few years’ time. It is also engendering hope that the country might at long last develop an infrastructure for turning theoretical knowledge, long the strength of its scientific community, into practical solutions. There are in fact practical developments in the Russian military-industrial complex that seek to incorporate certain AI elements into existing and future missile, aircraft, electronic warfare (EW), unmanned systems, and other technologies. Russians also expect AI to help automate the analysis of satellite imagery and radar data by quickly identifying targets and picking out unusual behavior by enemy ground or airborne forces.

However, based on the available evidence, Western militaries need not be immediately alarmed about the arrival of AI-infused Russian weapons with next-generation capabilities, except perhaps in the field of EW. Western and Chinese efforts are currently well ahead of Russian initiatives in terms of funding, infrastructure, and practical results. But the Russian government is clearly aiming to marshal its existing academic and industrial resources for AI breakthroughs, and it just might achieve them. Read the full article at Defense One.

For the first time, Russia is showcasing unmanned military systems at a military parade

On April 18, 2018, Russian Defense Minister Sergei Shoigu announced that this year’s military parade in Moscow commemorating the Soviet victory over Nazi Germany in WWII will feature new and advanced weaponry. Specifically, he noted that for the first time ever, “the Uran-9 combat multifunctional robotic system, the Uran-6 multipurpose mine-clearance robotic vehicle and Korsar short-range drones” will be showcased alongside other land and air weapons.

This announcement is momentous. Victory Day parades are back in fashion in Russia, after a brief hiatus from the annual military pageantry of the Soviet days. At the parade itself, both the latest and legacy technologies are displayed, from WWII-era tanks to the latest combat vehicles, missiles and airplanes. All technology displayed on parade has been or is in regular use, so it was probably only a matter of time before the Russians started showing off their unmanned military systems.

Over the past several years, the Russian Federation has made great strides in developing a wide variety of unmanned aerial, ground and sea/underwater vehicles. Of these, unmanned aerial vehicles (UAVs) have seen extensive use in Russian operations, along with a growing number of unarmed ground vehicles (UGVs) for demining and ISR missions. That is why Russia’s choice to display these particular unmanned systems is so interesting: of the three vehicles named, only the unarmed Uran-6 has seen actual operational use, most notably in Syria. The Uran-9 has been undergoing testing and evaluation by the Russian Ministry of Defense, and this particular UGV, given its PR-ready look of a small-scale tank, has been shown extensively at various domestic and international exhibitions. Moreover, the Korsar UAV is a virtually unknown vehicle. In 2015-2016, there were announcements that its production would begin in 2017; however, the manufacturer did not appear to start mass production then, and it is now presumed to start this year. In fact, Russia operates an entire fleet of UAVs that have seen extensive operational use in Ukraine and Syria: the short-range Eleron-3, the longer-range Orlan-10 (the most numerous UAV in the Russian military), and the long-range Forpost (itself a licensed copy of the Israeli Searcher UAV).

There are other smaller UAVs in Russian service that have been growing in numbers and importance as key mission multipliers for Russian forces. The absence of these battle-tested and readily available UAVs is curious, given the decision to showcase unmanned systems in the first place. On the UGV side, while the Russian military in Syria used numerous UGVs for ISR and demining, most were small and may not make for a good exhibition owing to their size. Still, the Russian military is in fact evaluating two mid-sized UGVs that have undergone extensive trials and are ready to be put into actual use: the armed “Soratnik” and “Nerehta.” The absence of these two vehicles from the May 9 parade is also curious, given the extensive publicity they have received over the past 24 months. Additionally, the Russians have showcased the small “Platforma-M” guard UGV at May 9 Victory Day parades in Kaliningrad as far back as 2014, and will do so this year as well. Finally, if all the selected unmanned systems are shown on top of military trucks, rather than moving under their own power in a potentially more crowd-pleasing display, it is also curious that another key unmanned system will be absent that day: the Orion-E long-range UAV, which was unveiled with great fanfare at last year’s military exhibition.

In the end, it is up to Moscow to select what its citizens and the international community will see during the parade. The selection of one particular unmanned system over another may have to do with logistics, internal politics or other factors. Still, selecting an unknown UAV over several others that have proven themselves in service is a curious decision. Perhaps Russia is saving these other unmanned vehicles for future parades. And speaking of parades, Moscow is in fact a latecomer to showcasing UAS in such a setting. Belarus, Kazakhstan, Armenia, Azerbaijan, China and Iran are among the growing number of nations displaying domestic and imported unmanned military systems at military parades. Given the resources Russia is dedicating to the design, production and eventual use of unmanned military systems, May 9 will certainly not be the last time it showcases them.

What is this blog about?

CNA launched the Center for Autonomy and Artificial Intelligence (CAAI) as a direct result of the rapid, even explosive, growth in AI in recent years. Recall that it was less than two years ago (in the spring of 2016) that AlphaGo, the Go-playing AI developed by Google’s DeepMind, defeated Lee Sedol, a multiple-time world champion Go player, in a landmark event that, prior to the match and given Go’s complexity compared to chess, most AI experts believed was years (perhaps decades) in the future. Now consider that, as remarkable as AlphaGo’s defeat of Lee Sedol was, it arguably pales in comparison with the advances made to AlphaGo’s learning algorithm in the months following that landmark match. A research paper received by the prestigious peer-reviewed journal Nature in April 2017 (and subsequently published online on 18 October 2017) announced that a new Go-playing AI called AlphaGo Zero had achieved superhuman performance, winning 100–0 against AlphaGo. Moreover, this new machine learning algorithm accomplished this by starting tabula rasa (i.e., entirely through self-play, without any human gaming knowledge or data) and did so in roughly 3 days (compared to the several months of training required by the original AlphaGo)!

How might AI evolve in the months and years ahead, and what are the military implications of this “technology”? CAAI has been stood up to address this basic question (see the first batch of papers and reports that the Center has published since its launch). This blog is intended as a place for commentary on AI, machine learning, and autonomy, and as a general forum for public discussion of ongoing projects. As such, it also complements another CAAI initiative, AI with AI, a weekly series of 20- to 30-minute podcasts exploring the latest breakthroughs in artificial intelligence and autonomy. Whereas the podcasts have time only to mention and outline a few of the “most interesting” news items that caught the ears and eyes of the editors between recording sessions, this blog provides an opportunity to expand on one or more major stories, to provide additional background, and/or to link directly to original sources. This blog will also draw heavily on CNA’s in-house experts on autonomy, AI, and drones; cyber security; defense technology; and other related areas. All visitors to our blog are encouraged to participate in the discussion with their own news, comments, and speculations about how AI and autonomy might evolve.

CNA’s New Center for Autonomy and AI

In October 2017, CNA launched its new Center for Autonomy and Artificial Intelligence (CAAI), with Dr. Larry Lewis named as Director.

Throughout history, the ability to adapt technological advances to warfighting has led to fundamental changes in how war is conducted. Examples include the development of the crossbow, gunpowder-propelled projectile weapons, rockets and jet aircraft, and precision-guided munitions. Autonomy and AI represent revolutionary technologies that will change the future of warfare. They offer the U.S. opportunities to counter and deter emerging threats, address security challenges, and advance U.S. national interests.

But this opportunity is by no means assured, since autonomy also offers potential asymmetric advantages to peer competitors, some of which have been pursuing these capabilities aggressively. Additionally, rapid innovation in the private sector, including commercial research and development efforts in autonomy and AI that dwarf those of the U.S. military, makes it challenging for the U.S. to quickly identify and integrate cutting-edge technological developments. Finally, this technology carries potential dangers that should be guarded against, including unpredictability and the risk of civilian casualties in some operational contexts. Though much of the emerging technology is new, there are still many opportunities to avoid challenges and missteps seen before, by learning from past lessons observed in U.S. operations and institutional processes.

Given the impact autonomy and AI will have on the character of warfare, CNA created CAAI to focus on these emerging technologies and their contribution to national security. The Center draws on more than 250 experienced researchers, many with advanced degrees and wide expertise and experience across a spectrum of disciplines, and connects them with government, private industry, and other stakeholders. The Center combines CNA’s strengths and experience in military operations with focused expertise in autonomy and AI. Its goal is to provide technical, strategic and operational insights and to connect government and private stakeholders to ensure the U.S. is at the forefront of this rapidly evolving area.